We need to talk about AI. …Now.

This is longer than intended. My apologies. If you adopt the ‘brace for impact’ position when anyone mentions AI perhaps you can skip this one. If you don’t, then perhaps you should read further.

It’s 2 am, you’ve played around with the parameter settings, you’ve found some highly effective text prompts to produce high quality images, you even have one of your lovely hand sketches contributing to the mix… the AI imager is hitting it out of the park, wondrous architectural image after wondrous architectural image. It’s like you have found a golden vein and you are mining away. The dopamine is flowing with each new suite of images, time is of no consequence. Since you upped the resolution and sampling steps it’s now taking almost 2 minutes to create a new suite of austere, desolate, gentle, stylised wonder, replete with delicate landscapes. You begin to think to yourself - “I am REALLY good at this!”. Subconsciously you are beginning to think “This is ALL me! These outputs would be nothing without my words and creative DNA there in the mix!”.

The following morning, you awake bleary-eyed, wondering if it was all a dream. You check the AI folder. It’s all there. OMG, this is wild!

You fire half of your art department.

This was me a month ago after installing the freely available Stable Diffusion AI on my home workstation. As I am my own art department, I’m less fired and more like a new member of a Borg hive mind (Star Trek reference). I was filled with enthusiasm and awe, but with each second a growing chorus of uncomfortable questions and issues was gnawing at me, and they continue by the day, getting closer to the bone.

I dove in. This topic scratches two of my favourite itches - creativity (I design) and algorithms (I dabble with code). In the time between then and writing this I have boned up on machine learning, adversarial networks, the essential methodologies in generation and training of the networks and the current thinking about the broader applications of this technology (the alignment issue). Now I am roughly equal portions in awe and deeply concerned, with a side order of panic.

We do need to talk about this now.

Point one, about my art department, about all my creative heroes… if we could have chosen one group of skills not to be replaced by algorithms! For crying out loud, why not just replace the politicians and media moguls first! Why oh why did we have to go here? This was not how I expected the robot uprising to evolve!

The answer is prosaic as always. It is an understandable evolution of teaching machines to recognise things we find useful: numbers, letters, cars, animals, faces… We have developed systems to take a 2D matrix of pixels and find the pattern we are looking for. To do this reliably requires an enormous training set of images with known content, tagged with tokens (text labels or similar useful semantic groupings), so that we can tweak the network to get closer to the right result given a wide variety of input images. The training process is coupled with a scoring step which checks whether the network has identified the item correctly or not (an easier job) and automatically tells it to tweak its parameter weightings in a particular direction until it is getting it right ‘enough’. In some set-ups that judge is itself a second network (look up ‘Generative Adversarial Networks’ if you are a nerd). Leave this running on a computer and eventually you’ll have a trained network: a network more or less able to identify the pattern it is looking for in new pictures (yes, a simplification).

Next, grow this training exponentially so you are training it to identify millions of different things - great. Now take a tagged image and iteratively add noise to it - great. Now train the network to reverse the process so it removes the noise - well done. Now give it nothing but noise and some text prompts to start with. It has been trained to associate the text prompts with image content, so it knows what should be there in the noise. Set it to work removing noise, maybe adding a little back then removing some more, until it is happy that it has produced an image - kinda, mostly, on balance, matching your text prompts.

The process of adding noise is referred to as diffusion and the network model that creates “image from noise” is called a diffusion model, hence “Stable Diffusion”. Except it doesn’t just add noise to a 2D image, it rolls up all the datapoints into a multi-dimensional abstract space, what they call “latent space”, and manipulates them there. Goodbye puny humans!
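For the fellow nerds, the noise-adding half of that story can be sketched in a few lines of Python. This is a toy under stated assumptions, not how Stable Diffusion is actually implemented: the four-pixel "image", the linear noise schedule and its start/end values, and all the function names are my own illustrative choices, and the real thing works in latent space rather than on raw pixels. It only shows the forward step, where a known image is blended with Gaussian noise; the trained network's job is to undo this.

```python
import math
import random


def linear_beta_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances rising linearly (assumed values, for illustration)."""
    if steps == 1:
        return [beta_start]
    gap = (beta_end - beta_start) / (steps - 1)
    return [beta_start + i * gap for i in range(steps)]


def signal_fractions(betas):
    """Running product of (1 - beta): how much of the original survives by each step."""
    fractions, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        fractions.append(running)
    return fractions


def add_noise(pixels, t, fractions, rng):
    """Jump straight to step t: scale the image down and mix in Gaussian noise."""
    keep = math.sqrt(fractions[t])
    noise_scale = math.sqrt(1.0 - fractions[t])
    return [keep * p + noise_scale * rng.gauss(0.0, 1.0) for p in pixels]


rng = random.Random(0)
image = [1.0, 0.5, -0.5, -1.0]  # toy four-pixel "image", values in [-1, 1]
fractions = signal_fractions(linear_beta_schedule(1000))

slightly_noisy = add_noise(image, 10, fractions, rng)      # still mostly image
almost_pure_noise = add_noise(image, 999, fractions, rng)  # almost nothing left
```

By the last step the surviving fraction of the original is tiny, which is why generation can start from nothing but noise and work backwards.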

It is important to understand the method is imperfect. It is not intelligent. It is a fantastic solution approximator. It has indeed captured a form of frozen understanding. But the network with its thousands/millions of parameters, automatically weighted connections and layers of nodes becomes a quintessential black box. Inscrutable, mysterious and yet so useful. It smells like danger to me. Tasty danger.

I know in my bones that the abilities of these AI networks will call many to question the validity of creative enterprise (if you want an AI with an artistic bent, look up Midjourney). The immediacy and surface level benefits will out-shout the concerns. People are largely opportunistic. If they see a short cut, they will take it, and this is one hell of a short cut. They will utilise and plough these networks for output long before they pause one day to ask, “I wonder what this network was trained on?”, shrug and move on to the next generation. Call me cynical, sure, I’ll admit it, but do you think I’m wrong? I think it’s a matter of time before a client shows up with AI generated imagery of their own as a brief: “We want you to make it look like this. We entered in a few buildings and architects we like as text prompts.” Is this a bad thing or just treading on our creative toes? Well, I think it depends on a few things, including our ability to communicate and educate our clients, but also on the network being used. Specifically, how it has been trained and what it has been trained on.

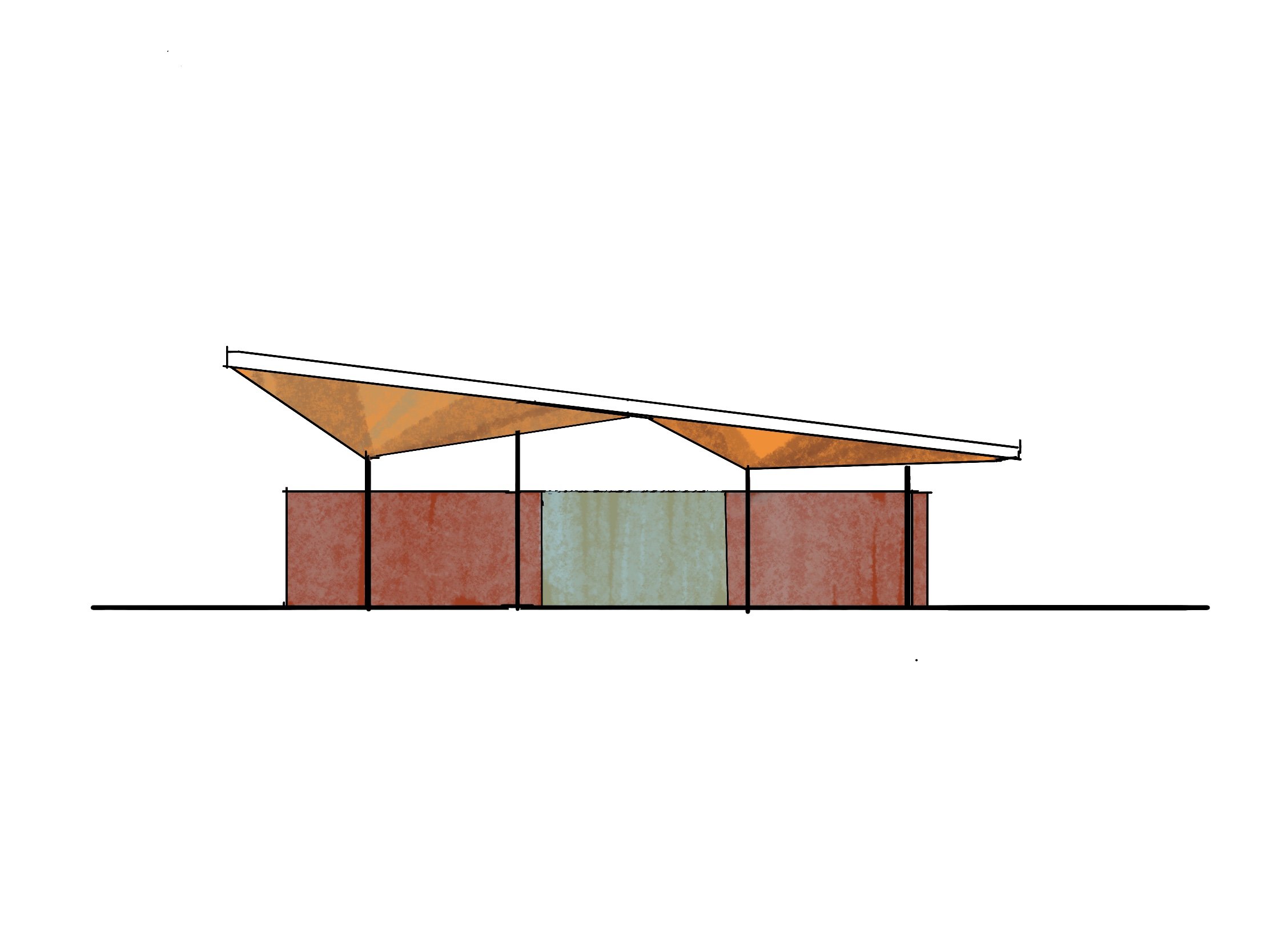

We can all so easily be beguiled into believing in our own contribution and genius in creating these images. Worse, most of us want to believe that we are good at something, to the point we just don’t look any deeper at the process. Like I said to myself as I was on my Canopy sculptures run, “Killer prompts man, you sure know how to use your words to get results out of this AI”. But I also felt like I was being shown the work of many students operating under my vague direction, and acting as a curator only. This is a different experience to getting into the mental space and putting marks on paper. It is a much more passive role with both a low mental effort and high dopamine reward - this should be a warning for all personalities with addictive traits. I can see dependency on the horizon.

So what has this network I was using, Stable Diffusion, been trained with?

Answer: 2.3 billion images scraped from the web originally, then a subsequent set of 600 million for upgrades, by now probably many more. All tagged with text tokens. An impractically huge undertaking for one human. No doubt as much of the process was automated as was possible, but at its core many, many human hours went into this, excluding the time that went into the original creative works. But what image content was actually in the training set? Or, equally valid, what was not in the training set? You may remember Google’s auto photo tagging incident from a few years ago, tagging some people as “Gorillas”. No prizes for guessing what was not in their training set.

As I looked lovingly at the suite of AI images I generated, curated and filed under “Hidden Buildings”, I saw architectural styles and references to other buildings that did not come from me. Where did they come from? Who did I just rip off? I didn’t expect there would be any wholesale plagiarism but there were certainly echoes. Then I remembered the architectural style references I put in my text prompts… Tadao Ando, Tom Kundig, both heroes of mine, were there (Sorry guys! Tadao, you can punch me. Tom, we still good for lunch, right?). The images did not look exactly like their work, not enough for anyone to say “Ha! You copied this!”, and there were also clear AI ‘accidents’ of interpretation that were visually delightful. But regardless, did they or any other creator have a say in whether they wanted to participate in a training set? “Scraped from the internet” means they did not. It means Facebook posts, websites, blogs and anything accessible. You name it, an automated piece of software (a bot) can take a copy of it (scrape) and then attach tokens to it for use in training an AI.

I tried to self-justify - I saw the image, the image is unique, no-one else has seen it before, I think it has value, I could put it out into the world as my own work. But it isn’t mine really. It did not come from me, it came from the network. More specifically, from the artists, photographers and architects that featured in the training set, plus the vagaries of the network’s unique paths and weightings. These things created the outputs. I was simply a donor of a new random seed for it to iterate over. I was hit by the simple internal statement, “This is not your work.” Buuuuut… No one else has seen these, and do I not contribute by the act of curation and the originality of my contributing sketch? Well yes, I do, but here’s the repeated clincher - none of the artists, photographers, designers and architects whose work featured in the training set gave their permission for this kind of use.

My daughter is an artist and within her realm of digital art she has recently been disheartened by “inappropriate” use of AI art. For example, a very popular manga artist, Kim Jung Gi, recently passed away, yet through using AI some less sensitive users were able to reproduce art in his style, exactly in his style, right after his passing. Not influenced by or as a tribute to, just dead ringers. This upset many fans who vocalised their angst. The rebuttal to the upset fans has been in the form of a surly, “You artists whine too much. This guy’s art is in the public domain, so why shouldn’t we use it?”. There is also an undercurrent here of, shall we say, artists vs non-artists. AI allows those who don’t care to develop the necessary artistic skills to produce high quality art, but clearly on the backs and shoulders of the originals who came first and ended up in a training set. There is a feeling of, “Hey artists, you’re not so special now eh?” A levelling of the playing field or a cheapening of the commodity? Personally I fear it’s the commodity. Again, I’m positive no-one asked him for permission to include his work in any training set.

Through the use of AI we have successfully managed to digitise ‘style’. If you have a popular style that has a web presence, this can now be misappropriated, be it photography, architecture, design, illustration, art, you name it. Get ready for it.

How do we find out what’s in a training set? Access to the Stable Diffusion training set is not, to my knowledge, publicly available. I came across a page from Waxy where they had gained access to 12 million images (out of an estimated 2.3 billion) of the training set and set up a word search facility. Quite revealing. Out of 12 million, my style references got 52 hits for Tom Kundig and 0 hits for Tadao Ando (I’m outraged and relieved, but no doubt he would have turned up in the remaining 2.28 billion).

This really is a new world with many new questions. I can see class actions on the horizon pursuing removal of individuals’ and firms’ work from training sets. I’m no lawyer but I think the horse has bolted on this and we now need to learn how to live in this new reality. We could however get on the front foot and try to legislate access to training sets, but as so much time and resources go into the training process this would be hard fought ground. We could also attempt to state on any stylistic post that we categorically do not give permission for this work to form part of an AI training set. It’s a start.

Is there any good news? Yes, I think so. The Stable Diffusion interface I use allows image input as well as words. We can also direct specific regions of an image for the AI to rework. This allows me to do something like the images below in a few minutes.

The orthogonal renderings above were done after a little tweaking of the text prompts (including “timber cladding” and “black metal”). Time investment - original sketch 5 minutes, AI work 5 minutes - total 10 minutes. I will be experimenting with this technique in my regular practice.

Good news 2: the directed re-working of specific areas of an image combined with appropriate text prompts allows for quick generic image population as well:

The above image is not perfect but communicates the concept of surrounding activity quite well, and certainly for less time investment than modelling + rendering + post production. The lighting and reflections are particularly impressive. This technique improves the AI’s utility as a tool but there is still a large component of random chance to the outputs. Use with caution - know how much scrutiny your image is going to come under. The more familiar readers will be able to identify the image above as an early version of Stable Diffusion (1.4) due to the trademark mangling of some geometry and organic forms. This will only get better. I am downloading Stable Diffusion 2.1 onto my system as I write. Why? Like everyone else, I want to see what I can do with it.

Good news 3: Seeding existing imagery with simple Photoshop/Procreate work can yield useful results. See the example below:

Good news 4: Adding detail to your existing renders. Using a combination of directed AI reworking and some simple drawn/seeded elements you can quickly populate your renders with the detritus of life. The results can be difficult to control, and there is clearly more control in modelling directly, but look how well it does the plants and the crockery on the shelves after the roughly drawn-in prompts.

Beyond image generation, I think the training of neural nets combined with human input/oversight has the greatest promise. The design tasks we all love to hate, toilet layouts, car-parks, loading bays, residential yield plates? I think these are prime candidates.

I still dream of programming an algorithm in something like Grasshopper (a Rhino-based graphical programming interface) to design site approaches given my parameters, my chosen scoring methods and an evolutionary solver (this crunches through a range of selected parameters while monitoring your scoring criteria and gives you the top scores - can take a long time), but training an AI network would be a faster way to get to the results after the investment of training. A shortcut for which I would need to trade the certainty and transparency of my code for an inscrutable black box. The safety check here would be me reviewing the outputs and saying, “That’s interesting. It could work if we did this and this…”. Again, over-dependency looms.
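To show what I mean by an evolutionary solver, here is a rough sketch in Python rather than Grasshopper. Everything specific in it is hypothetical: the two parameters (a driveway width and a setback, in metres), their ranges, and the toy scoring function that simply prefers a 6 m driveway and a 10 m setback. A real site-approach score would be far messier. The loop itself is the simple idea described above: score a population of candidates, keep the best half, mutate them to make the next batch, repeat.

```python
import random


def evolve(score, bounds, population=20, generations=50, mutation=0.1, seed=0):
    """Keep the best half each generation and refill with mutated copies."""
    rng = random.Random(seed)

    def random_candidate():
        return [rng.uniform(lo, hi) for lo, hi in bounds]

    def mutate(candidate):
        child = []
        for value, (lo, hi) in zip(candidate, bounds):
            nudged = value + rng.gauss(0.0, mutation * (hi - lo))
            child.append(min(hi, max(lo, nudged)))  # stay inside the allowed range
        return child

    pool = [random_candidate() for _ in range(population)]
    for _ in range(generations):
        pool.sort(key=score, reverse=True)          # highest score first
        parents = pool[: population // 2]           # survivors carry over unchanged
        children = [mutate(rng.choice(parents))
                    for _ in range(population - len(parents))]
        pool = parents + children
    return max(pool, key=score)


# Hypothetical parameters: (driveway width in m, setback in m).
BOUNDS = [(3.0, 9.0), (0.0, 20.0)]


def toy_score(params):
    """Made-up scoring: best at a 6 m driveway and a 10 m setback."""
    width, setback = params
    return -((width - 6.0) ** 2) - ((setback - 10.0) ** 2)


best = evolve(toy_score, BOUNDS)
```

Because the survivors are carried over unchanged, the top score can never get worse from one generation to the next, and every line of the process stays readable. That transparency is exactly what gets traded away with a trained network.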

This is where I start my go-fund-me for a year or two off to train the ultimate yield study designer AI right? What’s in the training set you ask? That’s the right question. Answer: All of the drawings and planning application/DA drawings I can get my hands on - all of it. Fellow designers, anyone feeling threatened right now? Perhaps you should.

I can see networks being trained to follow a practice’s particular approach on formulaic design, but due to liability concerns and the whole black box thing, the results would always need to be vetted by suitably insured humans. They could save us a lot of time though and maybe allow us to focus on more effective value adds. Unfortunately, training an AI from scratch would seem, on the face of it, too onerous (watch this space), but there are built-in tools within Stable Diffusion to “train” one’s own network. These are commonly used to train an AI to produce one consistent result, like the same face, and are built upon the existing network, so the moral concerns remain.

The truly intoxicating aspect of AI imagery is the lack of limits. I had a lot of fun one night sitting with a fellow architect, showing him what it could do. I have a Studio Ghibli poster in my home office so we landed on trying “Totoro flying an x-wing fighter”. (image below)

He didn’t make it into the X-wing but other than that this one was knocked out of the park! It’s a keeper! Which demonstrates nicely the creative power of these tools. Their randomness can create images that trigger positive reactions and allow us to take new starting positions for creative development. At the same time, their randomness makes them very hard to direct in explicit directions. I can only imagine that as the networks grow and evolve this will become less of an issue, but at the moment it is still a throw of the dice. Dice that give little screams about other people’s work as they bounce and roll.

Casting a little forward, I can imagine an artisanal backlash generating disclaimers on creative works - “No AI used in the creation of this work”. But I suspect this would be limited to fellow creatives preaching to the choir. There is enough source data out there for the larger AI networks to just keep iterating (‘autonomous’ AI creative output is also a thing). Some technology aware clients may go on the offensive, demanding full disclosure of the use of AI systems to protect against future liabilities (I would). Perhaps less so for purely creative endeavours and more for the AI that recommends an unnecessary medical operation and can’t tell you why: “You want to remove what?!”

“Well yes that’s what the computer says will cure your rash…”

“Can you at least tell me why?”

“Ummm…”

I’m certain that our insurers are working on new clauses and exceptions as we speak.

As it stands right now I think if we decide to use this technology we do it transparently. The least we can do is to declare it and be explicit in declaring stylistic influences. We should also have a hard think about our ongoing contributions to the AI bot-scrapers.

Finally, we should acknowledge that, as mesmerising as it seems, these images and creations are accessible within our own personal super computers. Before we run off and stop sketching ever again, we should appreciate and honour the act of human creation and understand that the AIs are, in fact, now reflecting this back at us.

We are awesome.

Scary, funny and disturbing but awesome.

Good luck everyone!